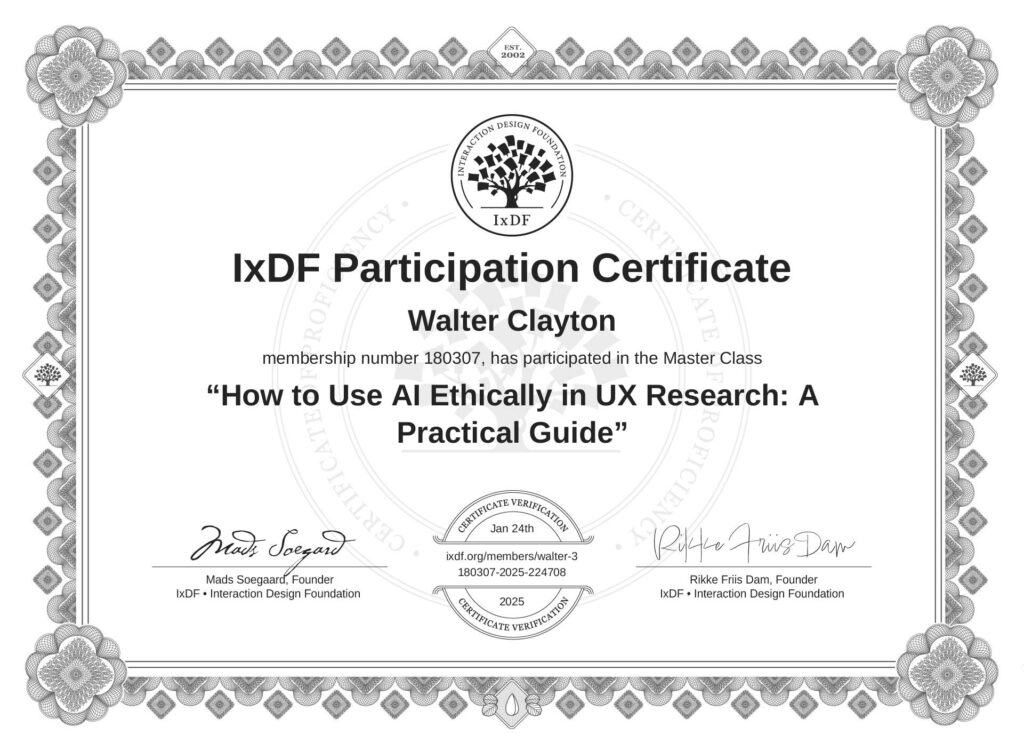

In the world of technology, few topics are as captivating, complex, and impactful as artificial intelligence (AI). As someone passionate about design, UX research, and the role of ethics in shaping the future, I recently attended the “How to Use AI Ethically in UX Research: A Practical Guide” workshop hosted by the Interaction Design Foundation (IxDF) and led by Cory Lebson. The experience left me with a lot to ponder – and some questions I’m still mulling over.

Reflecting on the Workshop: Insights and Questions

The workshop shed light on the importance of AI ethics in UX research. Some guidelines, such as those based on the GDPR, are already well established, but Cory’s practical demonstrations brought fresh perspectives.

For instance:

- Using tools like GPT-4 to design research questions.

- Discovering pre-constructed research templates and AI-powered tools like Dovetail to streamline workflows.

It became evident that while AI has enormous potential, we’re still grappling with foundational questions about its role in society. To deepen my understanding, I explored resources like the EU AI Act, whose risk-based approach boils down to “Higher risk = stricter rules.” I also came across the AI Ethics Guidelines Global Inventory, which highlights a significant gap: enforcement is still in its infancy, and most guidelines rely on voluntary self-regulation. Are we waiting for another Cambridge Analytica moment before we take these guidelines seriously?

Key Takeaways

- Ethics Are Crucial: AI ethics aim to safeguard individual, societal, and environmental integrity.

- Standards Are Still Evolving: Creating consistent and enforceable standards for AI is a complicated and ongoing process.

- Global Focus Is Limited: AI ethics lacks funding and priority worldwide, which leaves it an afterthought for many until disaster strikes.

These reflections made me think about the “black box” effect, where AI systems become so complex and opaque that humans can no longer understand how they reach their outputs, let alone control them. Transparency and explainability are vital to ensuring that AI remains a tool we can monitor and manage effectively.

Final Thoughts

AI is both a powerful tool and a complex responsibility. As designers, researchers, and technologists, it’s up to us to shape its future. By asking the right questions, establishing clear guidelines, and holding ourselves accountable, we can build an AI-powered world that prioritizes humanity and ethics over profit and power.

I’ll leave you with one question:

How do you think we can balance innovation with responsibility in the age of AI?

Share your thoughts in the comments – I’d love to hear them!
